I remember at school when they started wording problems in ways that tried to match the real world. Fill in the blank questions like:

6 – 2 – 2 = ?

were replaced with:

“Billy has 6 sweets and gives 2 each to Bobby and Jill. How many sweets does Billy have left?”

The same, somewhat contrived, sort of examples showed up as I progressed through school and university. The point I think my teachers were trying to make is that even if a maths problem is about subtraction, in the real world it will be described in terms of Billy and his friends. People that really understand maths see the connection, get out their calculators and know to hit the minus key…

Since, so far at least, machine learning at Coursera remains an application of numerical analysis (specifically a lot of matrix manipulation relying on unseen proofs from the magical wonderland of linear algebra), machine learning can only be unlocked by those who have a good sense of how to turn real world problems into matrices of numbers they can feed into a computer running the right algorithm. And to be fair, the course teacher is doing a good job at helping us see how real world problems can be turned into “The Matrix”.

Here’s an example they gave us a few weeks ago: We watched a video explaining how a neural network was taught how to turn a steering wheel of a moving car back in the 90s. It was pretty simple in essence. While a human was driving, the vehicle had a camera mounted on the front, and every couple of seconds (processing power was not so great back then) a photo was taken, processed and down-sampled to a very low-res black and white image of the road ahead – something like 200 x 150 pixels I think, maybe less. When we saw it, essentially the road ahead was black; everything else was more or less grey. To the computer, this image was just a long stream of numbers (200 x 150 = 30,000 numbers to be precise) where each number represented the blackness of the image at each of the pixel spots. At the same time, the position of the steering wheel (how left or right it was currently turned) was turned into a number and stored with the image numbers. In ML parlance, the features (X) were the photo numbers, the output label (y) was some number representing the L-R position of the steering wheel. By dumping enough of these examples into a neural network, the system could figure out that certain patterns within these 30,000 numbers corresponded to one number representing the position of the steering wheel. Thus, once the network had been trained, when it saw similar patterns of numbers coming into it, it could predict where the steering wheel should be set and turn it accordingly.

Obviously, at such low-resolutions and such gaps between images, it wouldn’t have been very good at avoiding children running onto the road, but you could see that with higher resolution images being taken more regularly how the basic set-up could scale to handle more complex situations, and maybe even start to handle the position of the accelerator.

So, this (and probably the next) post will be about a couple of ideas I have had for turning some real world problems into numbers.

The Game of Mancala

My uncle gave us this game of (probably) African origins for Christmas. It is a two player game. Each player has 6 ‘pits’ with 4 ‘stones’ in it – a total of 48 stones. The aim of the game is to have more stones than your opponent when there are no more stones left in either your or your opponent’s side. The full rules are very simple, yet there is still quite a bit of strategy and thinking ahead involved if you want to win. My seven year-old daughter lost interest in chess (probably because her dad took too long choosing his moves) in favour of this, a much faster-moving game. So much so, that she now regularly beats most adults (including me) most of the time!

So, as a matter of paternal pride, I wondered if I could apply machine learning to this problem and have a computer program I have built beat her in this game, even if these days, I can rarely pull this off myself.

The way the alpha-GO team taught a computer to become a world champion beating Go player, was by having their AI play itself a zillion times, and learn what moves lead to ultimate victory. By recognising the patterns of victory, it was able to secure victory against humans in a very short time-span. My plan is to do the same with Mancala. It’s a much simpler game with far fewer potential moves each round and far fewer total moves per game, so it should be a lot less storage and processor intensive – and therefore it should be a lot easier for it to crush my seven-year old daughter. Not that I have any emotional issues about this of course.

Obviously, a program to play a game has a lot more to it than the algorithm that determines best moves. But since this is a blog about machine learning, let’s assume that I have written all the code necessary to select valid moves, animate the board and handle the other general game play stuff. Right now, I just want to talk about how I might represent game state at each turn and feed that into a machine learning algorithm – probably a neural network.

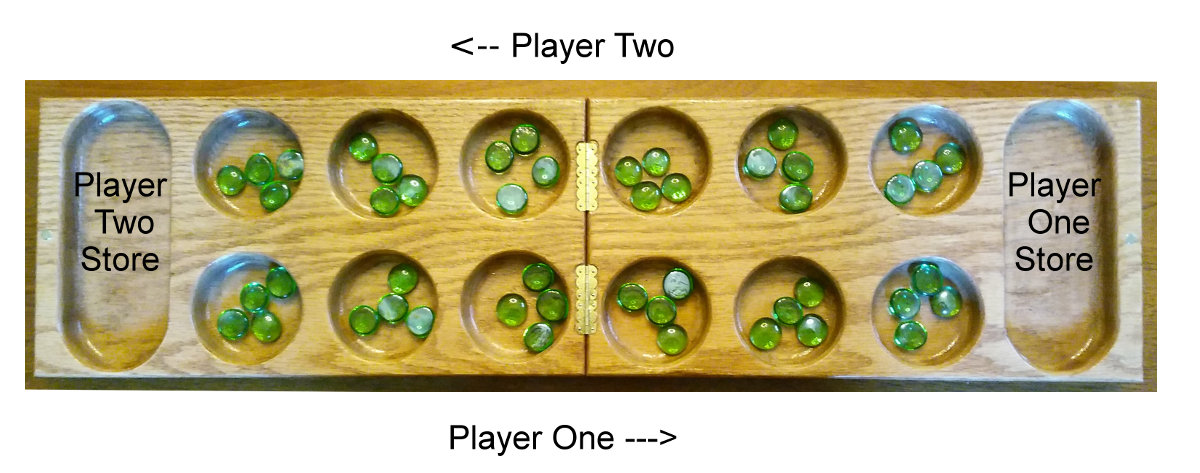

This is what the board looks like at the start of the game:

Each player has 24 stones, four in each of their six pits. For a turn, the player picks up all the stones in one of the pits on their side, and in an anti-clockwise direction, drops a single stone into each pit until they run out of stones. They can drop a stone in their own store as they go past, but not in the other player’s store. So, game representation could be pretty simple: the number of stones in each of the six pits, plus the two stores and the number of the player whose turn it currently is. So, the above state of game start could be represented by 15 integers, maybe something like:

[4 4 4 4 4 4 0 | 4 4 4 4 4 4 0 | 1]

The first six positions would be the number of stones in Player One’s pits, the next position the number of stones in Player One’s store. The next seven positions are the same – but for Player Two. The final number is the number of the player whose turn it is. I have put some | symbols in make it easier to for humans to read.

Lets say Player One picked up the four stones in the right most pit on their side. They would then drop one stone in their own store, and continuing in an anti-clockwise direction, drop the remaining three into the three right-most pits of their opponent. It would then be Player Two’s turn. Game state might then look like:

[4 4 4 4 4 0 1 | 5 5 5 4 4 4 0 | 2]

Game play continues until one player no longer has any stones in their side of the board. Their opponent then puts all the stones they have in their side of the board into their own store, and the number of stones each player has in their store is counted up. The winner is the player with the most number of stones in their store. There are some other rules I have not explained that enable a player to capture some of their opponents stones, and also get extra turns – but for the encoding part of the problem, those rules aren’t important.

Although a player might have a temporary advantage earlier in the game by having more stones in their pit than their opponent, victory is only assured at the end. It doesn’t matter how many more stones you have than your opponent – only that you have more. Of course, a more skilled player would normally win by a greater margin, and so it would probably be advantageous to track that.

So, how to store all this in feature (X) and label (y) matrices that could be fed into a neural network?

I think the simplest way would just be to store two consecutive game states (the 15 integers x 2 that were shown above) into an X matrix. The label (y) could just be a 0 or a 1 – indicating whether or not the player who has just played ultimately won or lost. But if we wanted to record the margin of victory, it could be an integer between -24 and +24. In this game, the total number of stones is 48 – although it would be impossible for one player to win ALL the stones. The greater the positive margin, the greater the victory over their opponent. Therefore, the sequence of moves by a player who ultimately won would have a positive value in the labels (y) for all the game states of that game. During game play, we just need to store all the moves in order. After a game was finished and the result known, it would be a simple matter of concatenating all the consecutive moves into 2-tuples (e.g. moves 1 and 2, then moves 2 and 3, then moves 3 and 4 etc) as our features (X) and add the final the result of the winning/losing margin to each as the label (y). So, let’s say, Player One won a game by a margin of 5 stones. And Let’s say that Player Two’s first move earned them a second turn. The first few elements of the training set might be:

| Features (X) | Label (y) | Comments |

|---|---|---|

444444 0 444444 0 | 1 | 444440 1 555444 0 | 2 |

5 |

Player 1 starts, adds 1 to their store |

444440 1 555444 0 | 2 | 444440 1 506555 1 | 2 |

-5 |

Player 2 gets another turn, adds 1 to their store |

444440 1 506555 1 | 2 | 444440 1 017666 1 | 1 |

-5 |

Player 2 doesn’t add to their store, back to Player 1 |

After each game (or maybe after every 10 games), the neural network could be retrained with the new training data of the latest games’ moves and results. When the next game starts, for each turn, the computer would test all the valid moves to predict the potential finishing score for the game, and choose the one that was most likely to result in a win by the largest margin.

At the start of the training sequence when the neural network is just loaded with random weights, it would just be playing random legal moves, but hopefully in time better moves will be selected more often as random good moves are picked over random bad moves. It might even be advantageous to discard early training data from the network – since a move that lead to a win when both players are playing as skilfully as Homer Simpson (like the above poor starting moves from both players) is just as likely to be a lousy move when a real strategy starts to develop. But maybe the neural network will figure this out as it goes… Anyway that has nothing to do with encoding the problem and is something I might address at a later stage if I ever try to develop this into some working software.

Since there are at most only twelve possible moves a player can make at each stage of the game (and often far less), there are way less possible combinations than in a more complex game like chess, or a game with a larger board like Go. Although there are probably at least tens of thousands of different ways a game could go, it likely would not take too long before the computer had played through pretty much all the significant combinations of the game to see which ones typically led to victory and could accurately choose them each time.

Watch out kiddo!

Coincidentally, your brother-in-law this past summer was sitting in front of a Mancala board, playing it with his kids, wondering how well a neural network approach would work. Can’t wait to watch your daughter vs The Matrix. (make sure you get it on video!)

You bet!